Imagination Unleashed

The Astonishing World of AI Hallucinations

Give the Bot Some Shrooms

We are at a crux regarding one of the key issues in artificial intelligence: is it creative, or is it just stealing content from the web and remixing it in a consumable manner? I don’t think the answer is clear-cut either way at the moment. If you grab some early AIs from a couple of years ago, I would give a more definitive no, and if we grab some AIs from a couple of years (damn, maybe even a few months) from now, I’d go for a resounding yes.

Creativity is the cornerstone of human ingenuity and probably one of the key components that led to our dominance of the planet (and maybe of other planets in the not-so-distant future). It is the capacity to imagine things that do not yet exist and then build and rework the physical realm to match what we had in our dreams and in our minds. This is a beautiful quirk of our species, and now we are creating another intelligence on this planet that is slowly showing hints of it as well.

AI has the capacity to create new things based on past data and toward a goal that we provide. So by some definitions AI is creative, and from a technical standpoint this is also what the AI world calls a hallucination. For an AI model, a hallucination is the way it fills in the gaps between its training data and a solution that was never stated or does not appear in the training dataset. It is thanks to these hallucinations that AI is capable of many wonderful things (like 99% of the images I’ve used for this newsletter; thank you, Midjourney).

Are The Machines Tripping?

Now, these hallucinations can be incredible when you’re trying to get some cool images from a generative art AI and it interprets your prompt in a new and different direction, which is fun and great. But it can be a complicated and even dangerous characteristic when hundreds of millions of people are using your AI tool for their work, to write essays, or to ask for instructions and assistance.

The danger is that if, for example, hundreds of thousands of people ask an AI for medical advice and it hallucinates in a large share of its answers, thousands of people could end up with faulty medical advice, because they lack the knowledge and the digital literacy to even know that AIs hallucinate.

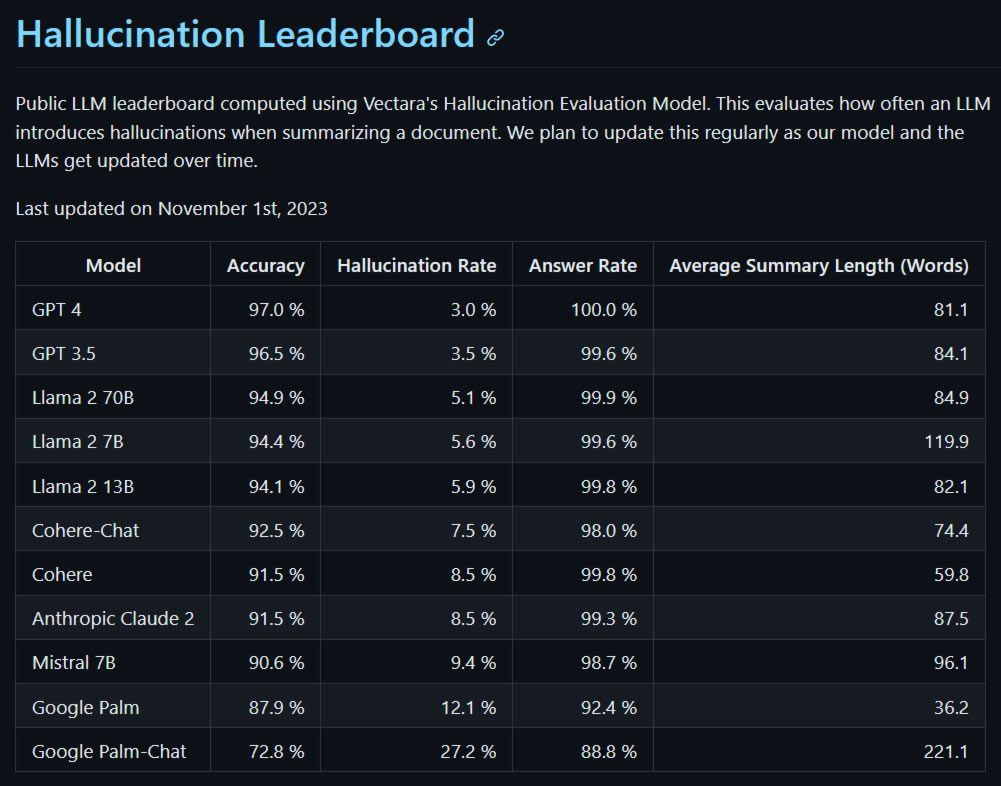

The risk is real, and researchers are working both on reducing hallucinations in specific AI models and on measuring how often different models hallucinate, so that they can be ranked from the most to the least hallucination-prone.

If you want to dig a bit deeper into AI hallucinations, here is a nice article and a nice video by IBM explaining them:

Although the term itself is hotly debated in the AI field, there are proposals for eliminating hallucinations or reducing them to a minimum. One of the most popular is a framework called Retrieval-Augmented Generation (RAG), which focuses on grounding the AI in the most accurate and up-to-date information. By feeding the model facts from an external knowledge repository at the moment it answers, you can improve the responses of large language models (LLMs). This of course costs resources and time, but alongside better prompt engineering it can improve results, and I think these are great techniques for industrial or more specialized uses of AI and LLMs.
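To make the grounding idea a bit more concrete, here is a minimal sketch of the retrieval half of RAG in Python. It assumes a tiny, hypothetical in-memory knowledge base and scores documents with a simple bag-of-words cosine similarity; real RAG systems usually use vector embeddings and a vector database, and the final prompt would then be sent to an LLM, a step left out here. The function names are illustrative and do not come from any particular library.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase the text and split it on anything that is not a letter or digit.
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    # Cosine similarity between two bag-of-words count vectors.
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    # Rank documents by similarity to the query and keep the top k.
    q = Counter(tokenize(query))
    ranked = sorted(documents, key=lambda d: cosine(q, Counter(tokenize(d))), reverse=True)
    return ranked[:k]


def build_grounded_prompt(query: str, documents: list[str], k: int = 2) -> str:
    # Put the retrieved facts in front of the question so the model answers from
    # them instead of filling the gaps on its own.
    context = "\n".join(f"- {doc}" for doc in retrieve(query, documents, k))
    return (
        "Answer using only the facts below. If they are not enough, say you don't know.\n"
        f"Facts:\n{context}\n\nQuestion: {query}"
    )


# Hypothetical knowledge base standing in for a company's real documentation.
KNOWLEDGE_BASE = [
    "Our support line is open from 9am to 5pm.",
    "Refunds are processed within 14 days.",
    "The premium plan includes priority support.",
]

if __name__ == "__main__":
    print(build_grounded_prompt("When is the support line open?", KNOWLEDGE_BASE, k=1))
```

Swapping the word-count scorer for embedding similarity would improve retrieval quality without changing the overall shape: retrieve the relevant facts first, then ask the model to answer only from them.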

On the other hand, some researchers argue that the term hallucination is misleading, because LLMs like GPT-4 are not really “hallucinating.” The word implies that some of what the model outputs is right and some of it is wrong, the way a human brain sometimes fails to recall accurately the information it holds. But since there is no notion of right or wrong for the AI, everything it produces is in a sense a hallucination, not just the outputs that happen to contain wrong facts. Honestly, when I saw this debate it seemed more like splitting hairs, but if you think otherwise, let me know in the comments.

Can AI Be Creative if it Doesn’t Live off its Wealthy Parents on a Flat in Berlin?

But let’s return to the initial question: is AI creative or not? Recent research on AI creativity and how to measure it highlights significant advances and raises some philosophical questions. State-of-the-art generative AI has reached a level where it can match humans on creativity tests, opening up the possibility of augmenting human creativity across many fields. This progress is complemented by insights from the psychological sciences, leading to a better understanding of creativity and fostering human-AI co-creativity in areas like dance, music, poetry, gaming, fashion, and sketching. To me that sounds amazing: human creativity enhanced by AI, with results you can already see today. Just go to any AI art generator and see it firsthand.

Another study showed that AI chatbots achieved higher average scores than humans on the Alternate Uses Test. This is the creativity test where you are told “List as many uses as you can think of for a paperclip,” you get about a minute, and your answers are scored on factors such as how many uses you come up with and whether they are workable, original, and creative. Great answers break away from the paperclip’s usual limits of material and size; you can go wild if the paperclip can be 30 meters tall and made of styrofoam, for example.

In this study, AI models such as OpenAI’s ChatGPT and GPT-4, among others, were prompted to generate creative uses for everyday objects. Creativity was assessed in two ways: by an algorithm that rated how close each suggested use was to the object’s original purpose (the farther away, the more original), and by human evaluators who rated the creativity and originality of the responses. And humanity LOST, so yeah, Skynet and the Terminator are coming for our paperclips.
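The article doesn’t name the scoring algorithm, but a common way to rate how far a suggested use is from an object’s original purpose is semantic distance: represent both as embedding vectors and take 1 minus their cosine similarity. Here is a minimal Python sketch of that idea. The three-dimensional vectors are made-up, hypothetical values chosen for illustration; a real pipeline would get embeddings from a trained language model.

```python
import math


def cosine_similarity(u: list[float], v: list[float]) -> float:
    # How closely two vectors point in the same direction (1.0 = identical direction).
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def semantic_distance(u: list[float], v: list[float]) -> float:
    # 1 - cosine similarity: near 0 means very close in meaning, larger means farther apart.
    return 1.0 - cosine_similarity(u, v)


# Hypothetical toy "embeddings"; real ones would come from a language model.
EMBEDDINGS = {
    "paperclip": [0.9, 0.1, 0.0],
    "hold papers together": [0.8, 0.2, 0.1],
    "emergency lock pick": [0.4, 0.7, 0.2],
    "giant styrofoam sculpture": [0.1, 0.2, 0.9],
}


def rank_uses(obj: str, uses: list[str]) -> list[tuple[str, float]]:
    # Score each use by its distance from the object, most original (farthest) first.
    scored = [(use, semantic_distance(EMBEDDINGS[obj], EMBEDDINGS[use])) for use in uses]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


if __name__ == "__main__":
    uses = ["hold papers together", "emergency lock pick", "giant styrofoam sculpture"]
    for use, distance in rank_uses("paperclip", uses):
        print(f"{use}: {distance:.2f}")
```

The toy numbers only illustrate why an unusual answer like a giant styrofoam sculpture lands farther from “paperclip” than holding papers together does; in the actual studies, human ratings were used alongside this kind of automatic score.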

Although AI responses were rated higher on average, the best human responses scored better, which suggests nuances in creative capacity. OK, so the top humans get to keep being creative and designing paperclips, but most of humanity could be outdone and outworked by current-level AIs.

Of course, there are some dissenting voices claiming that AI’s performance on these tasks doesn’t necessarily indicate original thought: the models could be recalling data from their training datasets, including similar tasks, rather than demonstrating genuine creativity. That is a great point, but how different is that from human creativity, which is also bound to some extent by our previous experiences and by factors such as culture and language?

Hallucinogenic Ethics

Of course, as we said before, using AI in different scenarios could enhance human creativity and output in both economic and creative terms (which is AMAZING), but we also need to consider the risks of hallucinations and inaccuracies as AI penetrates more and more of humanity’s endeavors. We need more and better digital literacy and prompt engineering taught in schools and to more people. But there are also other issues we need to watch out for, like:

Misuse Potential: AI-generated images and videos could be manipulated to spread false information, influence public opinion, or perpetuate harmful stereotypes, especially given how easily they spread through social media and other platforms. The possibility of malicious uses, such as creating deepfakes, is particularly alarming (so please never upload photos of your underage children online; you have no idea of the risks).

Bias and Discrimination: AI models trained on biased data can perpetuate existing biases and discrimination, raising ethical concerns about how these models are applied and the outputs they produce. A striking case is an algorithm that estimated how likely convicted people were to reoffend and suggested sentences accordingly; its results turned out to be extremely biased, in fact incredibly racist.

Transparency and Accountability: The lack of transparency in how AI algorithms reach their decisions can lead to a lack of accountability for the content they produce, making it hard to detect harmful outputs or biases inherent in AI-generated content. This is the so-called black-box effect we see in many LLMs, where even some of the engineers working with these models cannot explain how the AI arrived at its answers to certain prompts.

Data Privacy and Protection: Relying on large datasets to train AI algorithms raises concerns about privacy and data protection, particularly when sensitive or personal data are used without consent. This matters all the more because many AI models are also trained on the data they collect from interacting with their users. So the next version of ChatGPT, for example, might use all the interactions with its previous versions as training data to provide better output, but have you consented to OpenAI recording all your interactions with ChatGPT for future use?

Impact on Creativity and Originality: Using AI algorithms to generate content raises questions about the role of human creativity and the value of originality, especially in artistic and media-related fields. It also raises legal questions and casts serious doubt on whether our copyright system can protect intellectual property in the age of AI. So much so that artists are developing tools to “poison” generative AIs, as a way to fight back against what they perceive as overreach: the theft of their work for use as AI training data.

AI hallucinations significantly influence generative AI, a field focused on creating new and original content. While they offer the potential for more creativity and originality, they also raise concerns about the accuracy and reliability of generative AI models, since these hallucinations are not grounded in real-world data. This can lead to inaccurate or misleading outputs, and to the perpetuation of biases and other ethical problems if the model was trained on biased or incomplete data. I don’t think that is so different from dumb humans who are taught wrong ideas or raised in awful cultures, religions, or environments. But unlike individual humans, who have a limited range of influence, an AI that is not built properly could influence hundreds of millions or even billions of people without them even being aware of it.

Conclusion

Personally, I believe AI is at a very interesting inflection point regarding creativity. First we outsourced our physical labor to machines, then we started outsourcing our “hard” intellectual calculations to computers, and now it seems the Age of Creative Machines is upon us: we can outsource our creativity to AI. As for what will remain exclusively “human actions” or “human tasks,” I don’t think there will be any, and the sooner we accept this, the sooner we can embrace the need for us and our technology to merge and accelerate toward the next steps of our species’ evolution, where we can do more, think beyond our limits, and create art the likes of which we have never imagined.

What do you think about all of this? Do you believe AI is creative? Will these creative AIs be here to enhance and help us, or are they just tools without a “soul” that can never create “true” art or truly creative ideas?

Let me know while you watch this old-school AI video from 8 years ago.